Keeping Your Data Safe: An Introduction to Data-at-Rest Encryption in Percona XtraDB Cluster

In the first part of this blog post, we learned how to enable GCache and Record-Set cache encryption in Percona XtraDB Cluster. This part will explore the details of the implementation to understand what happens behind the scenes.

How does it work internally?

The Record-Set cache and GCache expose an API to the client application (the Galera library) in the form of a memory allocator. The client asks for a buffer of a given size and gets a pointer back. It can then do anything it wants with that memory.

Behind the scenes, mmap is used: a file is created on disk and mmap-ed into the process’s virtual memory. The allocator hands out the requested amount of memory from this mapping and returns it to the client.

As a result, the application sees all the memory it allocates as if it were in RAM, even though it is backed by an on-disk file. A further consequence is that the application performs no explicit file I/O operations anywhere.
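To make the idea concrete, here is a minimal Python sketch of an mmap-backed allocator. It assumes a simple bump-pointer strategy; the real GCache allocator is C++ and far more elaborate, and `MmapArena` and its methods are hypothetical names. The client gets a writable `memoryview` into the mapping, which plays the role of the pointer, and writes to it without any explicit file I/O call.

```python
import mmap
import os
import tempfile


class MmapArena:
    """Hypothetical bump allocator that hands out slices of an mmap-ed file."""

    def __init__(self, path: str, size: int) -> None:
        self._fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
        os.ftruncate(self._fd, size)           # size the on-disk file like the cache
        self._map = mmap.mmap(self._fd, size)  # map the whole file into memory
        self._view = memoryview(self._map)
        self._next = 0                         # bump pointer: next free offset

    def allocate(self, n: int) -> memoryview:
        """Return a writable n-byte window into the mapping (the "pointer")."""
        if self._next + n > len(self._map):
            raise MemoryError("arena exhausted")
        buf = self._view[self._next:self._next + n]
        self._next += n
        return buf

    def close(self) -> None:
        # Every buffer returned by allocate() must be released before this call.
        self._map.flush()
        self._view.release()
        self._map.close()
        os.close(self._fd)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "galera.cache")
        arena = MmapArena(path, 4096)
        buf = arena.allocate(5)
        buf[:] = b"hello"  # a plain memory write: no write() call in our code
        buf.release()
        arena.close()
        with open(path, "rb") as f:
            print(f.read(5))  # the bytes ended up in the file
```

The bump-pointer design is only for brevity. Unlike a real ring buffer, it never reuses memory.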

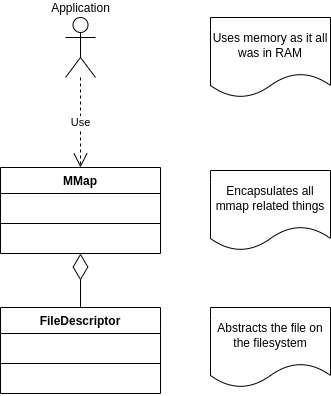

The diagram below shows a simplified view of how the internal parts relate:

FileDescriptor abstracts a file on disk. MMap abstracts the mapping of that file into the process’s virtual memory. Because the file is mmap-ed directly into the process’s virtual memory, there are no file I/O operations that a layer responsible for encryption/decryption could intercept. That’s why encryption is implemented at the MMap layer.

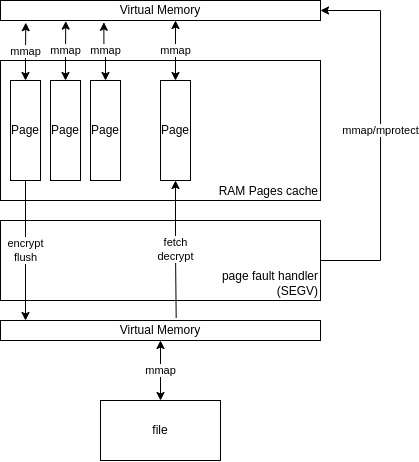

The diagram below shows a simplified view of these relations once encryption is introduced:

We introduced the EncMMap class, a decorator of MMap that encapsulates all encryption details. The application sees the same memory access interface regardless of whether encryption is enabled.
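The decorator idea can be sketched in Python as follows. This is an illustration under stated assumptions, not the actual EncMMap code. Python cannot intercept raw memory accesses, so memory access is modeled with indexing (`m[i]`). The cipher is a toy SHA-256-derived XOR pad, not real encryption. `MMap`, `EncMMap`, `toy_keystream_byte`, and `client_code` are hypothetical names. The point is that `client_code` runs unchanged against either class.

```python
import hashlib


def toy_keystream_byte(key: bytes, pos: int) -> int:
    # NOT real encryption: a position-dependent XOR pad, for illustration only.
    block = hashlib.sha256(key + (pos // 32).to_bytes(8, "little")).digest()
    return block[pos % 32]


class MMap:
    """Plain mapping: reads and writes go straight to the backing buffer."""

    def __init__(self, size: int) -> None:
        self.backing = bytearray(size)  # stands in for the mmap-ed file

    def __len__(self) -> int:
        return len(self.backing)

    def __getitem__(self, i: int) -> int:
        return self.backing[i]

    def __setitem__(self, i: int, value: int) -> None:
        self.backing[i] = value


class EncMMap:
    """Decorator with the same interface as MMap; the wrapped mapping holds ciphertext."""

    def __init__(self, inner: MMap, file_key: bytes) -> None:
        self._inner = inner
        self._key = file_key

    def __len__(self) -> int:
        return len(self._inner)

    def __getitem__(self, i: int) -> int:
        return self._inner[i] ^ toy_keystream_byte(self._key, i)  # decrypt on read

    def __setitem__(self, i: int, value: int) -> None:
        self._inner[i] = value ^ toy_keystream_byte(self._key, i)  # encrypt on write


def client_code(m) -> bytes:
    # The client only uses indexing, so it cannot tell which class it was given.
    for i, b in enumerate(b"record"):
        m[i] = b
    return bytes(m[i] for i in range(6))
```

In the real design, data is decrypted and encrypted a whole page at a time through the fault-handling machinery described in the following sections. It is not done per byte as in this sketch.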

The internal implementation of EncMMap is inspired by a project description (probably a student assignment) from Stanford University’s Computer Science Department. It poses the problem rather than solving it, but it gives ideas about how a similar problem can be approached.

The diagram below gives more insight into what happens at each layer:

- RingBuffer files and off-page files can be huge (much bigger than the available physical RAM), and the whole file is visible to the client through virtual memory. This means we cannot simply allocate a huge RAM buffer, hand out pointers into it, and then periodically encrypt it to the file in the background.

- Instead, allocate a virtual memory region (VM1) with mmap, of the same size as before (the GCache size), but protect the whole region with PROT_NONE.

- Allocate a pool of physical memory pages whose total size is much smaller than the GCache size.

- Register a SIGSEGV signal handler to catch accesses to VM1.

- mmap the underlying file as before (VM2).
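The bookkeeping behind these steps can be modeled as follows. This is a simplified Python sketch that assumes page-aligned sizes. `EncLayout`, `Prot`, and `page_of` are hypothetical names, and plain integers stand in for real addresses. `page_of` also shows the first decision the SIGSEGV handler makes: it maps the faulting address to a VM1 page index, or reports that the fault is outside VM1 so the original handler can take over.

```python
from enum import Enum
from typing import Optional


class Prot(Enum):
    NONE = "PROT_NONE"
    READ = "PROT_READ"
    READ_WRITE = "PROT_READ|PROT_WRITE"


class EncLayout:
    """Hypothetical model of VM1 protections and the encryption cache pool."""

    def __init__(self, vm1_base: int, gcache_size: int, page_size: int, cache_size: int) -> None:
        if gcache_size % page_size or cache_size % page_size:
            raise ValueError("sizes are assumed to be multiples of the page size")
        self.vm1_base = vm1_base        # start address of the reserved VM1 region
        self.gcache_size = gcache_size  # VM1 and the mmap-ed file (VM2) are this large
        self.page_size = page_size      # encryption cache page size
        # The whole VM1 region starts out inaccessible (PROT_NONE).
        self.vm1_prot = [Prot.NONE] * (gcache_size // page_size)
        # A much smaller pool of physical cache pages (modeled as ids only).
        self.free_pool = list(range(cache_size // page_size))

    def page_of(self, fault_addr: int) -> Optional[int]:
        """VM1 page index for a faulting address, or None if it is outside VM1."""
        offset = fault_addr - self.vm1_base
        if 0 <= offset < self.gcache_size:
            return offset // self.page_size
        return None  # not a VM1 access: chain to the original SIGSEGV handler
```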

How does it work?

The idea is to have a relatively small pool of RAM pages (the encryption cache) that acts as the backend for VM1. If a VM1 page is not yet mapped to a physical page from the cache, a free cache page is mapped to it. The corresponding encrypted content is then fetched from the underlying VM2, decrypted, and stored in that cache page.

If there is no free page in the cache, existing pages are freed first: pages that were changed are encrypted and flushed to VM2, and the freed pages are then mapped where needed.

Let’s see, case by case, how it works.

Case 1: No pages mapped to VM1, encryption cache empty

- The application accesses page N of VM1.

- The OS calls the signal handler and provides the exact address that caused the SIGSEGV.

- If the address is outside VM1, the SIGSEGV was not caused by an access to VM1, so proceed with the original signal handler function.

- If the VM1 page containing the address is PROT_NONE (meaning no cache page is mapped to it), the access can be either a read or a write:

  - Allocate a physical memory page from the RAM page cache pool.

  - Fetch the data from the corresponding page of VM2 and decrypt it into the allocated physical memory page.

  - mmap the physical memory page to the VM1 page containing the address that caused the SIGSEGV, and mprotect it with PROT_READ.

- If the VM1 page containing the address is PROT_READ (meaning it contains clean decrypted data), it must be a write access, because a read access would not cause a SIGSEGV:

  - mprotect the page with PROT_READ | PROT_WRITE. From now on, the page is considered “dirty”.
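Case 1 can be simulated in Python to show the protection state transitions. In this sketch, all names are hypothetical. An explicit `on_fault` call stands in for the SIGSEGV handler, a list of `Prot` values stands in for the VM1 page protections, and a one-byte XOR is a placeholder for the real cipher. A write to a `PROT_NONE` page faults twice. The first fault maps clean decrypted data as `PROT_READ`, and the second upgrades the page to `PROT_READ | PROT_WRITE`.

```python
from enum import Enum


class Prot(Enum):
    NONE = 0        # no cache page mapped
    READ = 1        # clean decrypted data
    READ_WRITE = 2  # dirty: modified since it was decrypted


def toy_decrypt(data: bytes, key: int) -> bytes:
    # Placeholder for the real cipher: XOR with a single byte (NOT secure).
    return bytes(b ^ key for b in data)


class Case1Sim:
    """Hypothetical model of the fault path when the cache still has free pages."""

    def __init__(self, vm2: bytearray, page_size: int, pool_pages: int, key: int) -> None:
        self.vm2 = vm2  # encrypted file contents (VM2)
        self.page_size = page_size
        self.key = key
        self.prot = [Prot.NONE] * (len(vm2) // page_size)  # VM1 page protections
        self.free_pool = [bytearray(page_size) for _ in range(pool_pages)]
        self.mapped = {}  # VM1 page index -> cache page backing it

    def on_fault(self, page: int) -> None:
        """Stand-in for the SIGSEGV handler, already given the faulting VM1 page."""
        if self.prot[page] is Prot.NONE:
            # Read or write: back the page with a cache page holding decrypted data.
            cache_page = self.free_pool.pop()  # an empty pool is Case 2, not modeled here
            start = page * self.page_size
            cache_page[:] = toy_decrypt(bytes(self.vm2[start:start + self.page_size]), self.key)
            self.mapped[page] = cache_page
            self.prot[page] = Prot.READ
        elif self.prot[page] is Prot.READ:
            # A read would not have faulted, so this is a write: mark the page dirty.
            self.prot[page] = Prot.READ_WRITE

    def read(self, addr: int) -> int:
        page, off = divmod(addr, self.page_size)
        if self.prot[page] is Prot.NONE:
            self.on_fault(page)
        return self.mapped[page][off]

    def write(self, addr: int, value: int) -> None:
        page, off = divmod(addr, self.page_size)
        # Like a restarted instruction, a write may fault twice: NONE -> READ -> READ_WRITE.
        while self.prot[page] is not Prot.READ_WRITE:
            self.on_fault(page)
        self.mapped[page][off] = value
```

VM2 is not modified in this path. Dirty data reaches VM2 only when a page is evicted (Case 2) or synced (Case 3).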

Case 2: SIGSEGV occurs, page protection is PROT_NONE, no free physical pages (cache full)

- Iterate through the mapped pages, trying to free some of them:

  - If the page is PROT_READ (clean), unmap it from VM1 and return it to the pool.

  - If the page is writable (PROT_READ | PROT_WRITE), the application has changed its content. Encrypt the data and flush it to the corresponding page of VM2, then return the page to the pool.

  - mprotect the freed VM1 page with PROT_NONE.

- Continue as in Case 1.
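Here is a sketch of the eviction step in Case 2, under the same simplifications: hypothetical names, an XOR placeholder cipher, and page states held in a list. The text does not say which pages are chosen for eviction. This sketch simply takes them in mapping order, which is an assumption.

```python
from enum import Enum


class Prot(Enum):
    NONE = 0
    READ = 1
    READ_WRITE = 2


def toy_encrypt(data: bytes, key: int) -> bytes:
    # Placeholder cipher (XOR with one byte); NOT the real encryption.
    return bytes(b ^ key for b in data)


def evict(prot, mapped, free_pool, vm2, page_size, key, want=1):
    """Hypothetical Case 2: free up to `want` cache pages; return the evicted VM1 pages."""
    evicted = []
    for page in list(mapped):  # currently mapped VM1 pages, in mapping order
        if len(evicted) == want:
            break
        cache_page = mapped.pop(page)  # unmap from VM1
        if prot[page] is Prot.READ_WRITE:
            # Dirty: the application changed it, so encrypt and flush to VM2 first.
            start = page * page_size
            vm2[start:start + page_size] = toy_encrypt(bytes(cache_page), key)
        # Clean (PROT_READ) pages are dropped without writing anything.
        free_pool.append(cache_page)  # return the physical page to the pool
        prot[page] = Prot.NONE        # the next access faults again (Case 1)
        evicted.append(page)
    return evicted
```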

Case 3: sync() requested

- Iterate through the mapped pages. For each page that is writable (PROT_READ | PROT_WRITE):

  - Encrypt the data and flush it to the corresponding page of VM2.

  - mprotect the VM1 page with PROT_READ, since it is clean again.

  - msync the corresponding VM2 page.
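Case 3 can be sketched the same way. Here `msync` is a hypothetical callback standing in for the msync(2) call on the VM2 range, so the example can record which pages were synced. The XOR cipher is again only a placeholder. This sketch assumes only dirty pages need the flush, `mprotect`, and `msync` steps, since clean pages already match VM2.

```python
from enum import Enum


class Prot(Enum):
    NONE = 0
    READ = 1
    READ_WRITE = 2


def toy_encrypt(data: bytes, key: int) -> bytes:
    # Placeholder cipher (XOR with one byte); NOT the real encryption.
    return bytes(b ^ key for b in data)


def sync(prot, mapped, vm2, page_size, key, msync):
    """Hypothetical Case 3: write back dirty pages; pages stay mapped afterwards."""
    for page, cache_page in mapped.items():
        if prot[page] is Prot.READ_WRITE:
            start = page * page_size
            vm2[start:start + page_size] = toy_encrypt(bytes(cache_page), key)  # flush
            prot[page] = Prot.READ     # clean again; the next write re-dirties it
            msync(start, page_size)    # stand-in for msync(2) on the VM2 range
```

Unlike eviction, sync keeps the pages mapped. Subsequent reads are still served from the cache without another decryption.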

As we can see, this design introduces a RAM page cache (the encryption cache). Depending on the available resources, we can control its parameters: the overall size and the page size.

gcache.encryption_cache_page_size / allocator.encryption_cache_page_size – These parameters control the size of a single encryption cache page. For a given cache size, a smaller page size means more pages in the cache.

The smaller the page, the quicker each page is encrypted or decrypted, but page swaps may happen more often.

The bigger the page, the slower each page is encrypted or decrypted, but if the application keeps operating on roughly the same region of memory, there is no need to swap pages.

gcache.encryption_cache_size / allocator.encryption_cache_size – These parameters control the amount of physical RAM used by the encryption cache.
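The trade-off between the two parameters can be explored with a small simulation. This Python sketch counts how many faults need a page to be decrypted for a given access trace. It assumes FIFO eviction, because the text does not specify the eviction policy. `count_misses` is a hypothetical helper, and the sizes below are example values, not defaults.

```python
from collections import OrderedDict

KIB = 1024


def count_misses(trace, cache_size: int, page_size: int) -> int:
    """Count accesses that need a page decrypted into the cache (FIFO eviction assumed)."""
    capacity = cache_size // page_size  # number of pages the cache can hold
    cached = OrderedDict()
    misses = 0
    for addr in trace:
        page = addr // page_size
        if page in cached:
            continue  # already decrypted in the cache: no fault
        misses += 1
        if len(cached) == capacity:
            cached.popitem(last=False)  # evict the oldest mapped page
        cached[page] = True
    return misses


if __name__ == "__main__":
    # A 64 KiB working set read in 1 KiB steps, three passes.
    trace = [i * KIB for i in range(64)] * 3
    print(count_misses(trace, 64 * KIB, 4 * KIB))   # 16: fits, only cold misses
    print(count_misses(trace, 64 * KIB, 16 * KIB))  # 4: fewer but larger decryptions
    print(count_misses(trace, 32 * KIB, 4 * KIB))   # 48: working set exceeds the cache
```

With 64 KiB of cache, the working set fits, so bigger pages mean fewer but larger decryptions. Shrinking the cache to 32 KiB makes the same trace thrash: every pass misses on every page.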

What about encryption keys?

Every file is encrypted with its own file key. Off-page files are short-lived, so for them that is enough: their file key is not stored anywhere. The GCache RingBuffer file is different. We need to be able to decrypt it after a server restart, so its file key has to be stored somewhere.

The file key is encrypted with the master key and stored in the header of the RingBuffer file itself. The master key is stored in the server’s Keyring Component, which is why the Keyring Component must be configured.
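This key hierarchy is a form of envelope encryption. The sketch below illustrates it under loud assumptions. `toy_wrap` is an XOR with a SHA-256 digest and is NOT secure; a real implementation would use a proper key-wrapping cipher. A `dict` stands in for the Keyring Component, and the header layout with a `master_key_id` field is hypothetical.

```python
import hashlib
import secrets


def toy_wrap(key: bytes, master_key: bytes) -> bytes:
    # Toy key wrap: XOR with SHA-256(master_key). NOT secure; illustration only.
    pad = hashlib.sha256(master_key).digest()
    return bytes(a ^ b for a, b in zip(key, pad))


def toy_unwrap(wrapped: bytes, master_key: bytes) -> bytes:
    # XOR is its own inverse, so unwrapping is the same operation.
    return toy_wrap(wrapped, master_key)


def create_ringbuffer(keyring: dict, master_key_id: str) -> tuple:
    """Generate a fresh file key and the (hypothetical) header storing it wrapped."""
    file_key = secrets.token_bytes(32)
    header = {
        "master_key_id": master_key_id,  # which keyring entry to fetch later
        "wrapped_file_key": toy_wrap(file_key, keyring[master_key_id]),
    }
    return file_key, header


def file_key_after_restart(header: dict, keyring: dict) -> bytes:
    """Recover the file key from the header using the master key from the keyring."""
    master_key = keyring[header["master_key_id"]]
    return toy_unwrap(header["wrapped_file_key"], master_key)
```

Only the wrapped file key lives on disk. Without the master key from the keyring, the header alone is not enough to recover the file key.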

The master key can be rotated at any time by executing:

```sql
ALTER INSTANCE ROTATE GCACHE MASTER KEY;
```

A deep re-encryption of the RingBuffer file can be achieved by deleting the GCache RingBuffer file and restarting the server, which generates a new file key.
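The difference between master key rotation and deep re-encryption can be sketched with the same kind of toy wrapping. This is again NOT real cryptography, and all names are hypothetical. As the text implies, rotation only re-wraps the existing file key, so the encrypted RingBuffer contents stay as they are. Recreating the file generates a new file key.

```python
import hashlib
import secrets


def toy_wrap(key: bytes, master_key: bytes) -> bytes:
    # Toy key wrap (XOR with SHA-256 of the master key); NOT secure. Self-inverse.
    pad = hashlib.sha256(master_key).digest()
    return bytes(a ^ b for a, b in zip(key, pad))


def rotate_master_key(header: dict, old_master: bytes, new_master: bytes) -> dict:
    """Re-wrap the same file key under a new master key; file contents are untouched."""
    file_key = toy_wrap(header["wrapped_file_key"], old_master)  # unwrap
    return {**header, "wrapped_file_key": toy_wrap(file_key, new_master)}


def recreate_ringbuffer(master_key: bytes) -> tuple:
    """Deep re-encryption: a newly created RingBuffer file gets a brand-new file key."""
    file_key = secrets.token_bytes(32)
    return file_key, {"wrapped_file_key": toy_wrap(file_key, master_key)}
```

Rotation is cheap because only a 32-byte value changes. Replacing the file key, by contrast, means every page of data must be encrypted again, which is why it requires recreating the file.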

One more thing worth mentioning: if the Keyring File component is used (for example, for testing purposes), the keyring file has to be stored outside the data directory; otherwise, the SST script deletes it. The RingBuffer master key is generated at the very beginning of Galera initialization, when the RingBuffer file is created. SST happens after that, and the master key has to survive it.

This blog post has explained the details of GCache and Record-Set cache encryption in enough depth to help you make an informed decision about whether to encrypt them and to understand what happens when you enable encryption.
