Group replication is a fault-tolerant/highly available replication topology that ensures if the primary node goes down, one of the other candidates or secondary members takes over so write and read operations can continue without any interruptions.

However, there are some scenarios where, due to outages, network partitions, or database crashes, the group membership could be broken, or we end up with isolated members. In those situations, we have to perform either a partial or full recovery to make the whole topology active again.

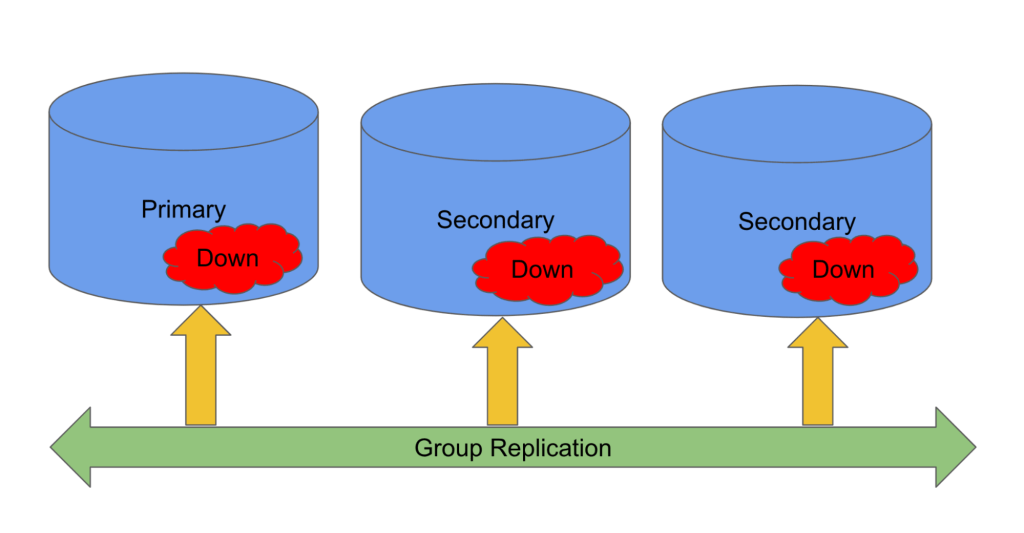

Let’s consider the scenario below: All the group nodes are down while the Read/Write operations are ongoing on the Primary member [Node1].

1) We have the group replication topology below, which I set up using the dbdeployer tool.

| 1 2 3 4 5 6 7 8 9 | node1 [localhost:23637] {root} ((none)) > select * from performance_schema.replication_group_members; +---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK | +---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+ | group_replication_applier | 00023637-1111-1111-1111-111111111111 | 127.0.0.1 | 23637 | ONLINE | PRIMARY | 8.0.36 | XCom | | group_replication_applier | 00023638-2222-2222-2222-222222222222 | 127.0.0.1 | 23638 | ONLINE | SECONDARY | 8.0.36 | XCom | | group_replication_applier | 00023639-3333-3333-3333-333333333333 | 127.0.0.1 | 23639 | ONLINE | SECONDARY | 8.0.36 | XCom | +---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+ 3 rows in set (0.01 sec) |

2) Running workload on the primary [127.0.0.1:23637].

| 1 2 3 4 5 6 7 8 9 10 11 12 13 14 | sysbench --db-driver=mysql --mysql-user=sbtest_user --mysql-password=Sbtest@2022 --mysql-db=sbtest --mysql-host=127.0.0.1 --mysql-port=23637 --tables=10 --table-size=10000 --create_secondary=off --threads=50 --time=0 --events=0 --report-interval=1 /opt/homebrew/Cellar/sysbench/1.0.20_6/share/sysbench/oltp_read_write.lua run |

Output:

| 1 2 3 4 | [ 1s ] thds: 50 tps: 1726.75 qps: 35178.95 (r/w/o: 24710.96/6964.73/3503.26) lat (ms,95%): 0.00 err/s: 0.00 reconn/s: 0.00 [ 2s ] thds: 50 tps: 2528.19 qps: 50605.04 (r/w/o: 35405.76/10142.90/5056.39) lat (ms,95%): 0.00 err/s: 0.00 reconn/s: 0.00 [ 3s ] thds: 50 tps: 2316.05 qps: 46257.26 (r/w/o: 32348.96/9290.15/4618.15) lat (ms,95%): 0.00 err/s: 0.00 reconn/s: 0.00 ... |

3) Here, we have deliberately stopped all the group nodes.

| 1 2 3 4 5 6 7 8 | ./stop_all # executing 'stop' on /Users/aniljoshi/sandboxes/group_msb_8_0_36 executing 'stop' on node3 stop /Users/aniljoshi/sandboxes/group_msb_8_0_36/node3 executing 'stop' on node2 stop /Users/aniljoshi/sandboxes/group_msb_8_0_36/node2 executing 'stop' on node1 stop /Users/aniljoshi/sandboxes/group_msb_8_0_36/node1 |

At this stage, the cluster is completely down, and all group members have stopped. Now, we will try to recover the cluster again.

Bootstrapping/recovering cluster nodes

4) First, let’s start each node. Since I am using dbdeployer, I can manage(start/stop) or do other stuff with some internal command/scripts below.

| 1 2 3 4 5 6 7 8 | shell> ./start_all # executing 'start' on /Users/aniljoshi/sandboxes/group_msb_8_0_36 executing "start" on node 1 ... sandbox server started executing "start" on node 2 .. sandbox server started executing "start" on node 3 .. sandbox server started |

5) Verify the current status by connecting to each node.

| 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 | node1 [localhost:23637] {root} ((none)) > select * from performance_schema.replication_group_members; +---------------------------+-----------+-------------+-------------+--------------+-------------+----------------+----------------------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK | +---------------------------+-----------+-------------+-------------+--------------+-------------+----------------+----------------------------+ | group_replication_applier | | | NULL | OFFLINE | | | | +---------------------------+-----------+-------------+-------------+--------------+-------------+----------------+----------------------------+ 1 row in set (0.00 sec) node2 [localhost:23638] {root} ((none)) > select * from performance_schema.replication_group_members; +---------------------------+-----------+-------------+-------------+--------------+-------------+----------------+----------------------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK | +---------------------------+-----------+-------------+-------------+--------------+-------------+----------------+----------------------------+ | group_replication_applier | | | NULL | OFFLINE | | | | +---------------------------+-----------+-------------+-------------+--------------+-------------+----------------+----------------------------+ 1 row in set (0.01 sec) node3 [localhost:23639] {root} ((none)) > select * from performance_schema.replication_group_members; +---------------------------+-----------+-------------+-------------+--------------+-------------+----------------+----------------------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK | +---------------------------+-----------+-------------+-------------+--------------+-------------+----------------+----------------------------+ | group_replication_applier | | | NULL | OFFLINE | | | | +---------------------------+-----------+-------------+-------------+--------------+-------------+----------------+----------------------------+ 1 row in set (0.01 sec) |

All of the nodes are offline at the moment.

6) We can recover the cluster by bootstrapping one of the group nodes, and then, after starting the other nodes, they will join via the distributed recovery process. But before that, it’s important to know the status of the transactions certified/committed on each node so we don’t end up bootstrapping the wrong node. Bootstrapping the wrong node can result in extreme data loss!

Note: “received_transaction_set” is empty in our case because all the nodes restarted again. However if the nodes were up and only GR is broken due to network distruption or some other event then we can observe the values in this section as well.

| 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 | ode1 [localhost:23637] {root} ((none)) > SELECT @@GLOBAL.GTID_EXECUTED -> ; +-----------------------------------------------+ | @@GLOBAL.GTID_EXECUTED | +-----------------------------------------------+ | 00023636-bbbb-cccc-dddd-eeeeeeeeeeee:1-148034 | +-----------------------------------------------+ 1 row in set (0.00 sec) node1 [localhost:23637] {root} ((none)) > SELECT received_transaction_set FROM -> performance_schema.replication_connection_status WHERE -> channel_name="group_replication_applier"; +--------------------------+ | received_transaction_set | +--------------------------+ | | +--------------------------+ 1 row in set (0.01 sec) node2 [localhost:23638] {msandbox} ((none)) > SELECT @@GLOBAL.GTID_EXECUTED; +----------------------------------------------+ | @@GLOBAL.GTID_EXECUTED | +----------------------------------------------+ | 00023636-bbbb-cccc-dddd-eeeeeeeeeeee:1-96208 | +----------------------------------------------+ 1 row in set (0.00 sec) node2 [localhost:23638] {msandbox} ((none)) > SELECT received_transaction_set FROM -> performance_schema.replication_connection_status WHERE -> channel_name="group_replication_applier"; +--------------------------+ | received_transaction_set | +--------------------------+ | | +--------------------------+ 1 row in set (0.01 sec) node3 [localhost:23639] {msandbox} ((none)) > SELECT @@GLOBAL.GTID_EXECUTED; +----------------------------------------------+ | @@GLOBAL.GTID_EXECUTED | +----------------------------------------------+ | 00023636-bbbb-cccc-dddd-eeeeeeeeeeee:1-76208 | +----------------------------------------------+ 1 row in set (0.00 sec) node3 [localhost:23639] {msandbox} ((none)) > SELECT received_transaction_set FROM -> performance_schema.replication_connection_status WHERE -> channel_name="group_replication_applier"; +--------------------------+ | received_transaction_set | +--------------------------+ | | +--------------------------+ 1 row in set (0.00 sec) |

From the gtid_executed information here it is clear that Node1(“00023636-bbbb-cccc-dddd-eeeeeeeeeeee:1-148034”) has the latest GTID coordinates so we should bootstrap this node.

Please note: When you have collected the transaction sets from all the group members, compare them to find which member has the biggest transaction set overall, including both the executed transactions (gtid_executed) and the certified transactions (on the group_replication_applier channel). You can do this manually by looking at the GTIDs, or you can compare the GTID sets using stored functions (GTID_SUBSET & GTID_SUBSTRACT).

| 1 2 | mysql> SELECT @@GTID_SUBSET(); mysql> SELECT @@GTID_SUBSTRACT(); |

7) Let’s start the bootstrap process on Node1.

| 1 2 3 | mysql> SET GLOBAL group_replication_bootstrap_group=ON; mysql> START GROUP_REPLICATION; mysql> SET GLOBAL group_replication_bootstrap_group=OFF; |

Now, if we check the status on Node1, it will reflect that the member is online.

| 1 2 3 4 5 6 7 | node1 [localhost:23637] {msandbox} ((none)) > select * from performance_schema.replication_group_members; +---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK | +---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+ | group_replication_applier | 00023637-1111-1111-1111-111111111111 | 127.0.0.1 | 23637 | ONLINE | PRIMARY | 8.0.36 | XCom | +---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+ 1 row in set (0.00 sec) |

8) Now, we can start the other nodes by simply starting the group replication. The node is joined by the Distributed Recovery Mechanism based on the state transfer from the available Binary logs on the source or by doing a full cloning of data via Clone Plugin if the binary logs do not exist anymore on any of the available group nodes.

| 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 | node2 [localhost:23638] {msandbox} ((none)) > start group_replication; node2 [localhost:23638] {root} ((none)) > select * from performance_schema.replication_group_members; +---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK | +---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+ | group_replication_applier | 00023637-1111-1111-1111-111111111111 | 127.0.0.1 | 23637 | ONLINE | PRIMARY | 8.0.36 | XCom | | group_replication_applier | 00023638-2222-2222-2222-222222222222 | 127.0.0.1 | 23638 | ONLINE | SECONDARY | 8.0.36 | XCom | +---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+ 2 rows in set (0.00 sec) node3 [localhost:23639] {root} ((none)) > start group_replication; Query OK, 0 rows affected (1.36 sec) node3 [localhost:23639] {root} ((none)) > select * from performance_schema.replication_group_members; +---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK | +---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+ | group_replication_applier | 00023637-1111-1111-1111-111111111111 | 127.0.0.1 | 23637 | ONLINE | PRIMARY | 8.0.36 | XCom | | group_replication_applier | 00023638-2222-2222-2222-222222222222 | 127.0.0.1 | 23638 | ONLINE | SECONDARY | 8.0.36 | XCom | | group_replication_applier | 00023639-3333-3333-3333-333333333333 | 127.0.0.1 | 23639 | ONLINE | SECONDARY | 8.0.36 | XCom | +---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+ 3 rows in set (0.00 sec) |

Note: If the binary logs somehow rotated or purged then we need to restore from the backups or alternatively we can set the Clone plugin which will automatically perform the full snapshot process.

Also, we can verify if the node is still doing any distributed recovery by executing the command below. When the recovery is complete, and the node shows “SECONDARY” status, the replication process [Slave_IO_Running/Slave_SQL_Running] will be stopped.

| 1 | node2 [localhost:23638] {root} ((none)) > SHOW SLAVE STATUS FOR CHANNEL 'group_replication_recovery'G; |

At this stage, we have a fully running cluster now.

Recovery from the backups

In rare situations when the data is completely corrupted, we want to do some partial recovery, or we want to add new nodes, we can directly perform backup/restore activity.

Let’s try to understand it using the scenario below. Here, we will try to bring back the cluster with the physical backup [Percona XtraBackup].

1) We took a backup from one of the group nodes for the demo purpose.

| 1 | shell> xtrabackup --host=127.0.0.1 -usbtest_user -pSbtest@2022 -P23637 --backup --target-dir=/Users/aniljoshi/backup/ |

Output:

| 1 2 3 | ... 2024-08-03T19:54:50.102188+05:30 0 [Note] [MY-011825] [Xtrabackup] Transaction log of lsn (266657170) to (266657878) was copied. 2024-08-03T19:54:50.329784+05:30 0 [Note] [MY-011825] [Xtrabackup] completed OK! |

2) In order to make that backup useful, let’s prepare it as well.

| 1 | shell> xtrabackup --prepare --use-memory=2G --target-dir=/Users/aniljoshi/backup/ |

Output:

| 1 2 3 | ... 2024-08-03T19:58:00.432140+05:30 0 [Note] [MY-012980] [InnoDB] Shutdown completed; log sequence number 266658326 2024-08-03T19:58:00.434701+05:30 0 [Note] [MY-011825] [Xtrabackup] completed OK! |

Now, considering we want to bring back the cluster with this fresh data.

3) First, we will clean the data directory of the target member [Node1].

| 1 | shell> rm -rf /Users/aniljoshi/sandboxes/group_msb_8_0_36/node1/data/ |

4) Then, we will copy the prepared data in the Node1 data directory.

| 1 | shell> cp -aR /Users/aniljoshi/backup/ /Users/aniljoshi/sandboxes/group_msb_8_0_36/node1/data/ |

5) Bootstrap the Node [Node1].

| 1 2 3 4 5 6 7 8 9 10 11 | mysql> SET GLOBAL group_replication_bootstrap_group=ON; mysql> START GROUP_REPLICATION; mysql> SET GLOBAL group_replication_bootstrap_group=OFF; node1 [localhost:23637] {root} (performance_schema) > select * from performance_schema.replication_group_members; +---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK | +---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+ | group_replication_applier | 11400bec-51a6-11ef-b950-29b40b1b64a7 | 127.0.0.1 | 23637 | ONLINE | PRIMARY | 8.0.36 | XCom | +---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+ 1 row in set (0.00 sec) |

The other nodes could be joined via the distributed recovery/ cloning process (if already set); however, here we will demonstrate how we can perform the restore/recovery by using the backups.

6) Let’s take a fresh backup from Node1 and remember the GTID execution information.

| 1 | shell> xtrabackup --host=127.0.0.1 -usbtest_user -pSbtest@2022 -P23637 --backup --target-dir=/Users/aniljoshi/backup/Primary/ |

Output:

| 1 2 3 | ... 2024-08-03T20:16:36.182978+05:30 0 [Note] [MY-011825] [Xtrabackup] Transaction log of lsn (266723771) to (266724479) was copied. 2024-08-03T20:16:36.408616+05:30 0 [Note] [MY-011825] [Xtrabackup] completed OK! |

GTID execution details:

| 1 2 | shell> cat /Users/aniljoshi/backup/Primary/xtrabackup_binlog_info mysql-bin.000006 193 00023636-bbbb-cccc-dddd-eeeeeeeeeeee:1-148040 |

7) Next, prepare the backup.

| 1 | shell> xtrabackup --prepare --use-memory=2G --target-dir=/Users/aniljoshi/backup/Primary/ |

Output:

| 1 2 3 4 5 | ... 2024-08-03T20:17:47.817955+05:30 0 [Note] [MY-013072] [InnoDB] Starting shutdown... 2024-08-03T20:17:47.822229+05:30 0 [Note] [MY-013084] [InnoDB] Log background threads are being closed... 2024-08-03T20:17:47.865162+05:30 0 [Note] [MY-012980] [InnoDB] Shutdown completed; log sequence number 266724886 2024-08-03T20:17:47.867836+05:30 0 [Note] [MY-011825] [Xtrabackup] completed OK! |

8) Clean the Node2 data directory and copy the prepared backup on Node2.

| 1 | shell> cp -aR /Users/aniljoshi/backup/Primary/ /Users/aniljoshi/sandboxes/group_msb_8_0_36/node2/data/ |

9) Once the service is restarted, we can verify whether gtid_purged is applied or not.

| 1 2 3 4 5 6 7 | node2 [localhost:23638] {root} ((none)) > show global variables like '%gtid_purged%'; +---------------+-----------------------------------------------+ | Variable_name | Value | +---------------+-----------------------------------------------+ | gtid_purged | 00023636-bbbb-cccc-dddd-eeeeeeeeeeee:1-148040 | +---------------+-----------------------------------------------+ 1 row in set (0.01 sec) |

Otherwise, we can set the gtid_purged with the help of the below command.

| 1 | MySQL> set global gtid_purged="00023636-bbbb-cccc-dddd-eeeeeeeeeeee:1-148040 "; |

10) Finally, we can establish the replication and initiate the GR process.

| 1 2 3 4 5 6 7 8 9 10 11 12 13 | node2 [localhost:23638] {root} ((none)) > change master to master_user=rsandbox,master_password='rsandbox’ FOR CHANNEL 'group_replication_recovery'; node2 [localhost:23638] {root} ((none)) > start group_replication; Query OK, 0 rows affected (1.97 sec) node2 [localhost:23638] {root} ((none)) > select * from performance_schema.replication_group_members; +---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK | +---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+ | group_replication_applier | 11400bec-51a6-11ef-b950-29b40b1b64a7 | 127.0.0.1 | 23637 | ONLINE | PRIMARY | 8.0.36 | XCom | | group_replication_applier | c2b74588-51a7-11ef-81da-5f8da38bd06f | 127.0.0.1 | 23638 | ONLINE | SECONDARY | 8.0.36 | XCom | +---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+ 2 rows in set (0.00 sec) |

Similar steps can be performed for subsequent nodes [Node3] as well. Moreover, in the palace of Percona XtraBackup, we can also use some logical backup/restore way like [mydumper/mysqldump/MySQL shell, etc.] to perform the recovery and establish the GR process. We are not going to discuss them here; however, the process is usually the same as we do for any async replication setup. Once the restoration completes with the expected gtid coordinates, then we can execute the below command.

| 1 2 | MySQL> change master to master_user=rsandbox,master_password='rsandbox’ FOR CHANNEL 'group_replication_recovery'; MySQL> start group_replication; |

Moreover, since MySQL 8.0.17 clone plugin is available which can be used for the remote cloning operations for distributed recovery in a Group Replication. This requires a bit of additional configuration and steps to be executed..

Recovery via cloning

Need to execute on Donor node[Node1]:

1) Dynamically installing the plugin.

| 1 2 | node1 [localhost:23637] {root} ((none)) > install plugin clone soname 'mysql_clone.so'; Query OK, 0 rows affected (0.16 sec) |

2) Persist the changes on the database.

| 1 2 3 | [mysqld] plugin-load-add=mysql_clone.so clone=FORCE_PLUS_PERMANENT |

3) Grant BACKUP_ADMIN privilege to the replication user – “rsandbox” which is responsible for the Distributed recovery process.

| 1 2 3 4 5 6 7 8 9 | node1 [localhost:23637] {root} ((none)) > show grants for rsandbox@'%'; +---------------------------------------------+ | Grants for rsandbox@% | +---------------------------------------------+ | GRANT USAGE ON *.* TO `rsandbox`@`%` | | GRANT BACKUP_ADMIN ON *.* TO `rsandbox`@`%` | | GRANT `R_REPLICATION`@`%` TO `rsandbox`@`%` | +---------------------------------------------+ 3 rows in set (0.00 sec) |

4) Verify the plugin status.

| 1 2 3 4 5 6 7 | node3 [localhost:23639] {root} ((none)) > select plugin_name,plugin_status from information_schema.plugins where plugin_name='clone'; +-------------+---------------+ | plugin_name | plugin_status | +-------------+---------------+ | clone | ACTIVE | +-------------+---------------+ 1 row in set (0.00 sec) |

Need to execute on Recipient node [Node3]:

1) Dynamically installing the plugin.

| 1 2 | node1 [localhost:23637] {root} ((none)) > install plugin clone soname 'mysql_clone.so'; Query OK, 0 rows affected (0.16 sec) |

2) Persist the changes on the database.

| 1 2 3 | [mysqld] plugin-load-add=mysql_clone.so clone=FORCE_PLUS_PERMANENT |

3) Verify the plugin status.

| 1 2 3 4 5 6 7 | node3 [localhost:23639] {root} ((none)) > select plugin_name,plugin_status from information_schema.plugins where plugin_name='clone'; +-------------+---------------+ | plugin_name | plugin_status | +-------------+---------------+ | clone | ACTIVE | +-------------+---------------+ 1 row in set (0.00 sec) |

4) Define the threshold for clone distributed recovery on all concerned/group nodes. The node will use the incremental data sync unless the transaction gap crosses the threshold below, in which case the data cloning process will run. For this test, we took a very small value, although the default is quite big.

| 1 2 | node3 [localhost:23637] {root} ((none)) > set global group_replication_clone_threshold = 10; Query OK, 0 rows affected (0.00 sec) |

5) Finally, start Node3. In the MySQL logs, we can see that the distributed recovery via the clone plugin has started. This process will replace or clone the whole data directory from the Donor/Source [Node1].

| 1 2 3 4 5 6 | 2024-08-03T18:21:04.039835Z 0 [System] [MY-013471] [Repl] Plugin group_replication reported: 'Distributed recovery will transfer data using: Cloning from a remote group donor.' 2024-08-03T18:21:03.033878Z 0 [Warning] [MY-013469] [Repl] Plugin group_replication reported: 'This member will start distributed recovery using clone. It is due to the number of missing transactions being higher than the configured threshold of 10.' 2024-08-03T18:21:04.150730Z 132 [Warning] [MY-013460] [InnoDB] Clone removing all user data for provisioning: Started ... /Users/aniljoshi/opt/mysql/8.0.36/bin/mysqld: Shutdown complete (mysqld 8.0.36) MySQL Community Server - GPL. 2024-08-03T18:21:09.6NZ mysqld_safe mysqld restarted |

6) Next, we can track the GTID execution and coordinate information using the file and command below.

| 1 2 3 4 5 6 7 8 9 | shell> cat #view_status 2 1 1722709264045254 1722709271116851 127.0.0.1:23637 0 ./mysql-bin.000006 4836 00023636-bbbb-cccc-dddd-eeeeeeeeeeee:1-148056 |

OR

| 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 | node3 [localhost:23639] {root} ((none)) > select * from performance_schema.clone_statusG; *************************** 1. row *************************** ID: 1 PID: 0 STATE: Completed BEGIN_TIME: 2024-08-03 23:51:04.045 END_TIME: 2024-08-03 23:51:11.117 SOURCE: 127.0.0.1:23637 DESTINATION: LOCAL INSTANCE ERROR_NO: 0 ERROR_MESSAGE: BINLOG_FILE: mysql-bin.000006 BINLOG_POSITION: 4836 GTID_EXECUTED: 00023636-bbbb-cccc-dddd-eeeeeeeeeeee:1-148056 1 row in set (0.00 sec) node3 [localhost:23639] {root} ((none)) > show variables like 'gtid_purged'; +---------------+-----------------------------------------------+ | Variable_name | Value | +---------------+-----------------------------------------------+ | gtid_purged | 00023636-bbbb-cccc-dddd-eeeeeeeeeeee:1-148056 | +---------------+-----------------------------------------------+ 1 row in set (0.01 sec) |

Otherwise, we can set the gtid_purged with the below command by passing the grid coordinates from the clone status above.

| 1 | node3 [localhost:23639] {root} ((none)) > set global gtid_purged="00023636-bbbb-cccc-dddd-eeeeeeeeeeee:1-128056 "; |

7) Running CHANGE REPLICATION command and initiating group replication.

| 1 2 3 4 5 | node3 [localhost:23639] {root} ((none)) > CHANGE REPLICATION SOURCE TO SOURCE_USER='rsandbox', SOURCE_PASSWORD='rsandbox' FOR CHANNEL 'group_replication_recovery'; Query OK, 0 rows affected, 2 warnings (0.01 sec) node3 [localhost:23639] {root} ((none)) > start group_replication; Query OK, 0 rows affected (10.69 sec) |

8) Finally, we can see Node3 showing in the group replication topology.

| 1 2 3 4 5 6 7 8 9 | node3 [localhost:23639] {root} ((none)) > select * from performance_schema.replication_group_members; +---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK | +---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+ | group_replication_applier | 00023639-3333-3333-3333-333333333333 | 127.0.0.1 | 23639 | ONLINE | SECONDARY | 8.0.36 | XCom | | group_replication_applier | 11400bec-51a6-11ef-b950-29b40b1b64a7 | 127.0.0.1 | 23637 | ONLINE | PRIMARY | 8.0.36 | XCom | | group_replication_applier | c2b74588-51a7-11ef-81da-5f8da38bd06f | 127.0.0.1 | 23638 | ONLINE | SECONDARY | 8.0.36 | XCom | +---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+ 3 rows in set (0.01 sec) |

Wrap-up

In this blog post, we explored ways to recover the group replication from some of the failures. We also discussed other approaches, like backups via Percona XtraBackup or the clone plugin process, which facilitates the disturbed recovery/data sync-up among the group nodes.

MySQL Performance Tuning is an essential guide covering the critical aspects of MySQL performance optimization.

Download the guide and unlock the full potential of your MySQL database today!