One doesn’t have to look far to see that there is strong interest in MongoDB compression. MongoDB has an open ticket from 2009 titled “Option to Store Data Compressed,” with its Fix Version/s set to “planned but not scheduled.” The ticket has a lot of comments, mostly from MongoDB users explaining their use cases for the feature. For example, Khalid Salomão notes that “Compression would be very good to reduce storage cost and improve IO performance” and Andy notes that “SSD is getting more and more common for servers. They are very fast. The problems are high costs and low capacity.” There are many more in the ticket.

In prior blog posts we’ve written about the significant performance advantages of using Fractal Tree Indexes with MongoDB. Compression has always been a key feature of Fractal Tree Indexes. We currently support the LZMA, quicklz, and zlib compression algorithms, and our architecture allows us to easily add more. Our large block size is another advantage, since these algorithms tend to compress large blocks better than small ones.
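To illustrate why block size matters, here is a small Python sketch (not Tokutek’s code) that compresses the same synthetic data as independent blocks of different sizes. It uses Python’s standard `zlib` and `lzma` modules; quicklz has no standard-library binding, so it is left out. The data generator and the block sizes are assumptions chosen for illustration, not the actual Fractal Tree node sizes.

```python
import lzma
import random
import string
import zlib
from typing import Callable


def sample_payload(n_bytes: int, seed: int = 42) -> bytes:
    """Synthetic data: random 4-letter words, each followed by a space."""
    rng = random.Random(seed)
    words = []
    while len(words) * 5 < n_bytes:
        words.append("".join(rng.choice(string.ascii_lowercase) for _ in range(4)) + " ")
    return "".join(words).encode("ascii")[:n_bytes]


def blocked_size(data: bytes, block_size: int, compress: Callable[[bytes], bytes]) -> int:
    """Compress `data` as independent blocks of `block_size` bytes; return total output bytes."""
    return sum(
        len(compress(data[i:i + block_size]))
        for i in range(0, len(data), block_size)
    )


if __name__ == "__main__":
    data = sample_payload(256 * 1024)  # 256 KiB of test data
    for name, fn in (("zlib", zlib.compress), ("lzma", lzma.compress)):
        for block in (4 * 1024, 64 * 1024, 256 * 1024):
            ratio = len(data) / blocked_size(data, block, fn)
            print(f"{name}: {block // 1024:>3} KiB blocks -> {ratio:.2f}x")
```

Each independently compressed block pays its own header cost and cannot reuse matches or statistics from earlier blocks, which is why the small-block totals come out larger.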

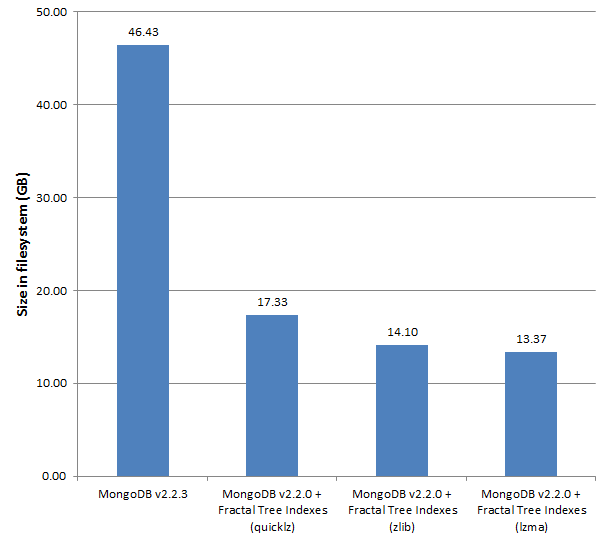

Given the interest in compression for MongoDB and our capabilities to address this functionality, we decided to do a benchmark to measure the compression achieved by MongoDB + Fractal Tree Indexes using each available compression type. The benchmark loads 51 million documents into a collection and measures the total size of all files under the database path (--dbpath).
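The measurement step amounts to summing file sizes under the data directory. A minimal Python sketch, assuming a placeholder default path of `/data/db`; it reports apparent file sizes (`st_size`), which can differ from the blocks actually allocated for sparse files, and it divides by 10^9 because the post does not state whether its GB figures are decimal or binary.

```python
import os
import sys


def dir_size_bytes(path: str) -> int:
    """Sum the apparent sizes (st_size) of all regular files under `path`."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            full = os.path.join(root, name)
            # Skip symlinks so linked files are not counted twice.
            if os.path.isfile(full) and not os.path.islink(full):
                total += os.path.getsize(full)
    return total


if __name__ == "__main__":
    # "/data/db" is only a placeholder; pass the --dbpath used by the server.
    db_path = sys.argv[1] if len(sys.argv) > 1 else "/data/db"
    print(f"{db_path}: {dir_size_bytes(db_path) / 1e9:.2f} GB")
```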

The structure of each document is as follows:

```
{
    "URI"           : "ilabdoor5981234",
    "name"          : "weather78123123",
    "origin"        : "core-mon2341341",
    "creation"      : <nanotime>,
    "expiration"    : <nanotime>,
    "data"          : "tokutek",
    "randomStrings" : <256 bytes of random 5-character strings, each ending in a space>
}
```
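For anyone reproducing the data set without the original application, a document of this shape could be generated as below. This is a hypothetical sketch: the fixed string values are copied from the sample, `<nanotime>` is approximated with `time.time_ns()`, and “5-character strings each ending in space” is read as four random lowercase letters followed by a space.

```python
import random
import string
import time


def random_strings(rng: random.Random, n_bytes: int = 256) -> str:
    # Assumption: each 5-character string is 4 random lowercase letters plus a space.
    words = []
    while len(words) * 5 < n_bytes:
        words.append("".join(rng.choice(string.ascii_lowercase) for _ in range(4)) + " ")
    return "".join(words)[:n_bytes]  # trim to exactly n_bytes


def make_document(rng: random.Random) -> dict:
    """Build one document shaped like the benchmark sample (hypothetical generator)."""
    now_ns = time.time_ns()  # stand-in for <nanotime>
    return {
        "URI": "ilabdoor5981234",
        "name": "weather78123123",
        "origin": "core-mon2341341",
        "creation": now_ns,
        "expiration": now_ns,  # assumption: the post doesn't say how this is set
        "data": "tokutek",
        "randomStrings": random_strings(rng),
    }


if __name__ == "__main__":
    doc = make_document(random.Random(1))
    print(len(doc["randomStrings"]), sorted(doc))
```

Batches of these dictionaries could then be loaded with PyMongo’s `Collection.insert_many`; how the original benchmark varied the fixed fields or set `expiration` is not stated in the post.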

If you’d like to run it yourself, the benchmark application is available here.

Benchmark Results

Compared to MongoDB’s file system size (46.43GB), our quicklz implementation is 62.68% smaller (17.33GB), zlib is 69.69% smaller (14.10GB), and lzma is 71.20% smaller (13.37GB).
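The percentages follow directly from the reported sizes. This short Python sketch recomputes them, along with the equivalent compression ratios, from the GB figures quoted above.

```python
# Sizes as reported above, in GB.
BASELINE_GB = 46.43  # MongoDB without compression
COMPRESSED_GB = {"quicklz": 17.33, "zlib": 14.10, "lzma": 13.37}


def reduction_pct(baseline: float, compressed: float) -> float:
    """How much smaller `compressed` is than `baseline`, as a percentage."""
    return (baseline - compressed) / baseline * 100


def compression_ratio(baseline: float, compressed: float) -> float:
    """How many times smaller `compressed` is than `baseline`."""
    return baseline / compressed


for codec, size in COMPRESSED_GB.items():
    print(f"{codec:>7}: {size:5.2f} GB, "
          f"{reduction_pct(BASELINE_GB, size):.2f}% smaller, "
          f"{compression_ratio(BASELINE_GB, size):.2f}x")
```

Because the inputs are the rounded GB figures, the recomputed percentages can differ in the last digit from those quoted above; the ratios work out to roughly 2.7x for quicklz, 3.3x for zlib, and 3.5x for lzma.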

The obvious benefit of high compression is a smaller on-disk footprint: less disk or flash to buy, faster file system backups, and smaller EC2 instances. Less obvious is IO efficiency. Because all reads and writes operate on compressed data, each IO carries more user data, often doing 5x to 20x the work of an uncompressed IO operation.
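A back-of-the-envelope model of the IO argument: if each physical IO moves a fixed number of compressed bytes, the logical data it carries scales with the compression ratio, so the number of IOs needed to read a given amount of data shrinks by the same factor. This Python sketch assumes a hypothetical 64 KiB IO size and a uniform ratio, and it ignores the CPU cost of compression and decompression.

```python
import math

GIB = 1024 ** 3
IO_BYTES = 64 * 1024  # hypothetical size of one physical IO


def ios_needed(logical_bytes: int, io_bytes: int, ratio: float) -> int:
    """IOs required to move `logical_bytes` of user data at a given compression ratio."""
    logical_per_io = io_bytes * ratio  # uncompressed bytes carried by one IO
    return math.ceil(logical_bytes / logical_per_io)


for ratio in (1.0, 3.47, 5.0, 20.0):
    print(f"{ratio:5.2f}x -> {ios_needed(GIB, IO_BYTES, ratio):>6} IOs per GiB")
```

With no compression (1.0x), reading 1 GiB takes 16,384 IOs under these assumptions; at 5x it drops to 3,277 and at 20x to 820.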

We will continue to share our results with the community and get people’s thoughts on applications where this might help, suggestions for next steps, and any other feedback. Please drop us a line if you are interested in becoming a beta tester.

We’re at Strata this week in the Innovation Pavilion. Please swing by to learn more if you are there.

MongoDB is released under the AGPL license. Where is the source code for your version of MongoDB + Fractal Tree Indexes?

The sources will be released when (and if) the binaries are released (or if we start running a mongodb service). As I understand the license, we don’t have to ship source code until then.

I think your test is grossly unfair … to Tokutek. Any MongoDB site under moderate load is going to blow up in size. Mongo needs to be compacted regularly, preferably nightly, to keep its file size in check.

Brian, this is the first test of many to come, we just wanted to give an update on our efforts and started with a simple test. There are many use-cases that will achieve far greater compression than this one, and MongoDB’s fragmentation will increase that advantage even further.

Seconded! We see MongoDB DB files ballooning in size with a simple, easy-to-reproduce random-write workload:

https://jira.mongodb.org/browse/SERVER-8078

Compression, and more sensible space reuse might remove the need for frequent compaction. Not sure why this doesn’t affect more people – certainly it’s problematic on SSDs.

I find this interesting, but I’m not sure how it would work until I’m able to look at the source. Even though, theoretically, all the nice things about this patch would be great, I am a little worried about how it will handle disk fragmentation; the extra CPU load, since MongoDB uses a single CPU core for write operations per logical database; and large numbers of small documents that are very different from each other, which makes compression less effective and may add disk-space overhead.

Alexis, we will be running many more benchmarks in the coming months to demonstrate the compression and performance advantages that Fractal Tree Indexes bring to MongoDB. I’m always looking for publicly available benchmark applications for MongoDB; please pass along any you know of.

There’s a simple Python benchmark at:

https://jira.mongodb.org/browse/SERVER-8078

The normal storage engine can reproducibly use 14GB+ to store 1.6GB of data. It would be interesting to know if this performs better…

Thanks for the pointer, I’ll run tests and post the results here soon.

You can also make a benchmark for updating (increments, for example) and reading, comparing:

1. uncompressed MongoDB, where data > RAM
2. compressed MongoDB, where data < RAM

Dorian, those sound like good tests. Do you have any existing benchmark code you can share?

Tim, I have no code. But you can do random and Zipfian read/update/write/in-query/range-query tests, etc.
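For the Zipfian case, the main missing piece is a skewed key generator. A minimal Python sketch using inverse-CDF sampling over a precomputed cumulative table; `ZipfianKeys` is a hypothetical helper name, and the skew exponent 0.99 is an assumed default (it is the constant YCSB uses).

```python
import bisect
import itertools
import random
from typing import Optional


class ZipfianKeys:
    """Draw keys in [0, n) with P(k) proportional to 1 / (k + 1) ** s (hypothetical helper)."""

    def __init__(self, n: int, s: float = 0.99, seed: Optional[int] = None):
        self._n = n
        # Cumulative (unnormalized) weights for inverse-CDF sampling.
        self._cdf = list(itertools.accumulate(1.0 / (k + 1) ** s for k in range(n)))
        self._rng = random.Random(seed)

    def next_key(self) -> int:
        u = self._rng.random() * self._cdf[-1]
        # First index whose cumulative weight exceeds u; the clamp guards float edge cases.
        return min(bisect.bisect_right(self._cdf, u), self._n - 1)


if __name__ == "__main__":
    zipf = ZipfianKeys(n=1_000_000, seed=42)
    print([zipf.next_key() for _ in range(10)])
```

Low-numbered keys form the hot set here; hashing or shuffling the key before using it as a document `_id` would spread the hot keys out if they should not be physically adjacent.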

To make it more repeatable, you could generate all the commands up front, save them to a file, and replay them from that file in the benchmark.
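Pre-generating the operations and replaying them from a file means every configuration sees the identical sequence. A minimal Python sketch that writes a seeded operation list as JSON Lines and streams it back; the operation names, the uniform key choice, and the `limit` field are hypothetical.

```python
import json
import random
from typing import Iterator

OPS = ("read", "update", "insert", "in_query", "range_query")  # hypothetical op mix


def write_workload(path: str, n_ops: int, n_keys: int, seed: int = 0) -> None:
    """Pre-generate `n_ops` operations and save them as JSON Lines."""
    rng = random.Random(seed)
    with open(path, "w", encoding="utf-8") as f:
        for _ in range(n_ops):
            op = {"op": rng.choice(OPS), "key": rng.randrange(n_keys)}
            if op["op"] == "range_query":
                op["limit"] = rng.randint(1, 100)
            f.write(json.dumps(op) + "\n")


def read_workload(path: str) -> Iterator[dict]:
    """Yield operations back in exactly the order they were written."""
    with open(path, encoding="utf-8") as f:
        for line in f:
            yield json.loads(line)
```

A replay loop would iterate over `read_workload(path)` and dispatch each operation to the corresponding PyMongo call (for example `find_one` or `update_one`), timing each one.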

I’m currently working on Sysbench for MongoDB, with results coming soon. It covers most of what you are describing.

Hello Tim,

Good read. Pretty exciting to see the Fractal Tree Indexes race ahead of their MongoDB counterparts. From a MongoDB perspective, will these indexes be rolled out as part of a future Mongo release, or has that already happened? And, as asked earlier, is the license ready yet?

MongoDB 2.8 is adding support for alternative storage engines and we plan on being one of them.