Thinking about moving to PostgreSQL? You’re not the only one.

Maybe it’s the licensing fees your current database keeps hiking year after year. Maybe performance is starting to lag just when you need it most. Or maybe you’re just tired of asking permission to make changes. Whatever pushed you to this point, PostgreSQL probably feels like the right move… and it can be.

But that doesn’t mean it’s easy.

The challenge isn’t PostgreSQL itself; it’s everything that comes with the migration. Most teams underestimate what’s involved. It’s not just a technical switch. It’s a series of decisions about architecture, downtime, tooling, testing, and what happens the moment you go live.

So, let’s discuss what makes a PostgreSQL migration work and how to avoid the things that derail it.

Why PostgreSQL makes the shortlist for migration

It usually starts with a moment.

Another renewal contract for your current database shows up. A support ticket goes unresolved for too long. Someone pulls a cost report, and suddenly everyone’s asking why the database is eating half the budget.

And then someone says it: Should we just move to PostgreSQL?

Not a bad idea.

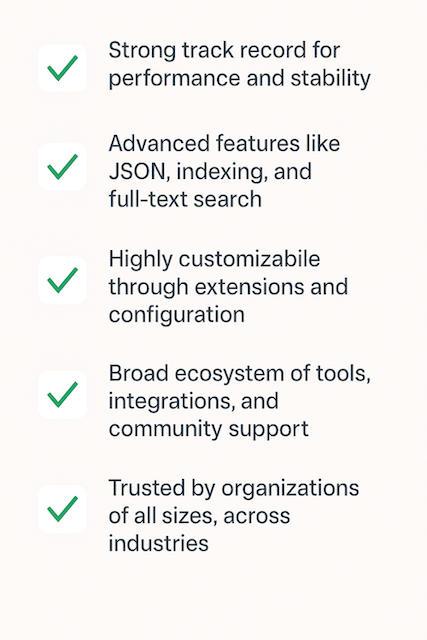

PostgreSQL stands out for all the right reasons.

The case for PostgreSQL is easy to make. The migration? That’s where things get interesting.

Migration planning is the part that (some) people skip

Most failed migrations don’t blow up during the cutover. They start falling apart weeks before that, during the part no one likes to talk about: planning.

It’s easy to treat a PostgreSQL migration like a technical to-do list. Move the data. Update the connection strings. Done.

But the reality? This is an architecture decision. A business continuity decision. A people decision. And if you don’t give it the time and attention it needs up front, you’re going to pay for it later, and usually at the worst possible time.

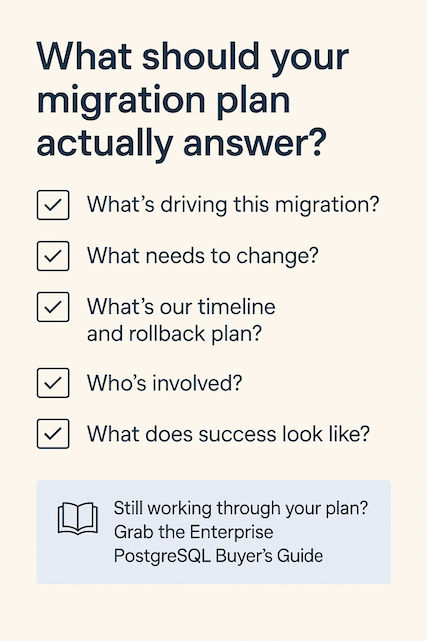

This is the part of the work that’s hard to retrofit. So before anything moves, get clear on the things that matter:

- What’s driving the migration? Is this about cost, flexibility, performance, compliance?

- What’s in your current system that might not map cleanly?

- What’s your actual migration window? What’s your fallback if something goes sideways?

- Who needs to be in the room, and what do they need to know?

- And, most importantly, what does success look like?

You don’t necessarily need a 40-slide migration plan, but you do need alignment, visibility, and a realistic understanding of what you’re getting into.

It’s not just about moving data

If you’ve never done a PostgreSQL migration before, it’s easy to assume it’s a cut-and-dried operation.

But anyone who’s been through it knows that’s not how it plays out.

A real migration has layers, and they all need attention.

First up: Schema conversion

This is about making sure your database structure makes sense on the other side. PostgreSQL has its own way of doing things. If you’re coming from Oracle, SQL Server, or another proprietary system, expect to deal with:

- Custom data types

- Table relationships

- Index strategies

- Stored procedures, packages, and triggers

Tools like pgloader, ora2pg, and AWS’s Schema Conversion Tool can help translate things for you. But manual clean-up is almost always part of the deal, especially when proprietary features don’t have a direct match.

Pros: You tackle compatibility early, which saves headaches later.

Cons: It can get messy fast, especially with complex logic or unique features.
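To make the type-mapping part concrete, here’s a minimal Python sketch that translates a few common Oracle column types into PostgreSQL ones. The mapping table is an illustrative assumption, not the rule set ora2pg or AWS SCT actually applies. Anything it doesn’t recognize (like Oracle’s spatial SDO_GEOMETRY) comes back as None, so it lands on the manual clean-up list.

```python
import re
from typing import Optional

# Illustrative Oracle -> PostgreSQL mappings (an assumption for this sketch,
# not the exact rules used by ora2pg or AWS SCT).
SIMPLE_TYPES = {
    "VARCHAR2": "varchar",
    "NVARCHAR2": "varchar",
    "CLOB": "text",
    "BLOB": "bytea",
    "DATE": "timestamp(0)",  # Oracle DATE also carries a time of day
}

# Matches a type name with optional arguments, e.g. NUMBER(12, 2) or VARCHAR2(100 CHAR).
TYPE_RE = re.compile(r"^\s*([A-Z0-9_]+)\s*(?:\(([^)]*)\))?\s*$", re.IGNORECASE)


def map_oracle_type(oracle_type: str) -> Optional[str]:
    """Return a PostgreSQL type, or None if the column needs manual review."""
    match = TYPE_RE.match(oracle_type)
    if not match:
        return None
    name, args = match.group(1).upper(), match.group(2)
    if name == "NUMBER":
        # Keep precision and scale; a bare NUMBER becomes unconstrained numeric.
        return f"numeric({args.replace(' ', '')})" if args else "numeric"
    base = SIMPLE_TYPES.get(name)
    if base is None:
        return None  # unknown or proprietary type: flag it for clean-up
    if name in ("VARCHAR2", "NVARCHAR2") and args:
        # Drop Oracle's BYTE/CHAR length semantics and keep only the length.
        return f"{base}({args.split()[0]})"
    return base


if __name__ == "__main__":
    columns = {
        "id": "NUMBER(10)",
        "price": "NUMBER(12, 2)",
        "name": "VARCHAR2(100 CHAR)",
        "created_at": "DATE",
        "notes": "CLOB",
        "location": "SDO_GEOMETRY",
    }
    for column, ora_type in columns.items():
        pg_type = map_oracle_type(ora_type)
        print(f"{column}: {ora_type} -> {pg_type or 'MANUAL REVIEW'}")
```

Returning None instead of guessing is deliberate: a silent wrong mapping is harder to catch later than an explicit review item.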

Data migration

Once your schema is ready, it’s time to move the data itself. This is where downtime and data integrity become front and center.

There’s no one “right” method here. Just the right one for your size, complexity, and tolerance for disruption:

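- Dump and restore: The classic. Simple, direct, and great for smaller databases. If the source is already PostgreSQL, run pg_dump, then pg_restore; from another engine, export with that engine’s own tools or load with something like pgloader. You’ll need a maintenance window, but it works.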

- Logical replication: Great for streaming changes from the source to the target PostgreSQL instance in near real-time. This drastically reduces downtime and gives you breathing room for testing. Tools like pglogical or built-in logical replication features make this easier.

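- Physical replication: Best when you need to move a large amount of data and downtime is a dealbreaker. You’ll create a full physical copy with pg_basebackup and keep the environments in sync with streaming replication (pg_rewind comes in if you later need to resynchronize a former primary, for example when failing back). Keep in mind this only works between PostgreSQL instances on the same major version.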

- Hybrid approaches: The most realistic choice for many teams. Some data moves offline, some replicates live, and some gets rebuilt fresh.
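For the dump-and-restore and logical replication routes, much of the work is getting the commands right. This Python sketch only builds them, so you can review or log them before anything runs. It assumes a PostgreSQL source, since pg_dump and built-in logical replication read only from PostgreSQL. Every database name and connection string, along with the migration_pub and migration_sub names, is a placeholder.

```python
import shlex
import subprocess
from typing import List, Tuple


def dump_restore_commands(source_db: str, target_db: str, dump_file: str,
                          jobs: int = 4) -> Tuple[List[str], List[str]]:
    """Build argv lists for a pg_dump / pg_restore round trip (PostgreSQL source only)."""
    dump = [
        "pg_dump",
        "--format=custom",   # compressed archive that pg_restore can read
        f"--file={dump_file}",
        source_db,           # database name or connection string
    ]
    restore = [
        "pg_restore",
        f"--dbname={target_db}",
        f"--jobs={jobs}",    # parallel restore (custom or directory format only)
        "--no-owner",        # don't recreate the source's object ownership
        dump_file,
    ]
    return dump, restore


def logical_replication_sql(publication: str, subscription: str,
                            source_conninfo: str) -> Tuple[str, str]:
    """Return (SQL to run on the source, SQL to run on the target).

    Assumes wal_level = logical on the source and that the schema already
    exists on the target: logical replication copies rows, not DDL.
    No quoting or escaping is done; inputs are trusted placeholders.
    """
    on_source = f"CREATE PUBLICATION {publication} FOR ALL TABLES;"
    on_target = (
        f"CREATE SUBSCRIPTION {subscription} "
        f"CONNECTION '{source_conninfo}' "
        f"PUBLICATION {publication};"
    )
    return on_source, on_target


def run(argv: List[str]) -> None:
    """Execute one command, stopping on the first failure."""
    print("running:", shlex.join(argv))
    subprocess.run(argv, check=True)


if __name__ == "__main__":
    dump, restore = dump_restore_commands("legacy_app", "new_app", "legacy_app.dump")
    print(shlex.join(dump))
    print(shlex.join(restore))
    for statement in logical_replication_sql(
        "migration_pub", "migration_sub", "host=old-db dbname=legacy_app user=replicator"
    ):
        print(statement)
    # Once the commands look right: run(dump); run(restore)
```

Keeping "build" and "run" separate makes it easy to rehearse the exact same commands against a staging copy before the real window.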

Common mistake to avoid: Picking a method before evaluating the realities of your data and applications. Don’t decide based on what sounds easiest; decide based on what’s actually doable.

Application migration

This is where teams often underestimate the lift.

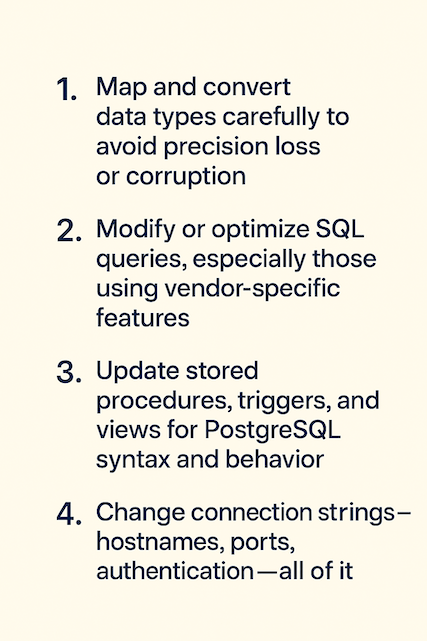

Moving your application to PostgreSQL isn’t just about flipping a connection string. It often means reworking how your application interacts with the database, especially if you’re coming from a system like Oracle or SQL Server.

Start by figuring out where your current setup might clash with how PostgreSQL does things. SQL dialects don’t always translate cleanly. PostgreSQL might interpret functions or expressions differently, and stored procedures written for other systems often need to be rewritten from the ground up. Even your data types, things like timestamps, numerics, or custom types, may not map one-to-one. It’s not just about syntax; it’s about making sure your app behaves the same way it did before, or better.
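A cheap first pass is to scan your application’s SQL for constructs that only the source dialect understands. The sketch below assumes an Oracle source and checks for a handful of well-known Oracle-isms. The pattern list is illustrative rather than complete, and a hit is a prompt for review, not an automatic rewrite.

```python
import re
from typing import List, Tuple

# Illustrative Oracle constructs with suggested PostgreSQL replacements (not exhaustive).
ORACLE_PATTERNS = [
    (r"\bNVL\s*\(", "NVL()", "COALESCE()"),
    (r"\bSYSDATE\b", "SYSDATE", "current_timestamp or localtimestamp"),
    (r"\bROWNUM\b", "ROWNUM", "LIMIT or row_number() OVER (...)"),
    (r"\bFROM\s+DUAL\b", "FROM DUAL", "drop it; PostgreSQL allows SELECT without FROM"),
    # PostgreSQL has a decode() function, but it decodes binary data and is
    # unrelated to Oracle's DECODE.
    (r"\bDECODE\s*\(", "DECODE()", "a CASE expression"),
    (r"\(\+\)", "(+) outer join", "ANSI LEFT/RIGHT JOIN"),
]


def find_dialect_issues(sql: str) -> List[Tuple[str, str]]:
    """Return (construct, suggested replacement) pairs found in one SQL string."""
    issues = []
    for pattern, construct, suggestion in ORACLE_PATTERNS:
        if re.search(pattern, sql, re.IGNORECASE):
            issues.append((construct, suggestion))
    return issues


if __name__ == "__main__":
    query = "SELECT NVL(name, 'n/a'), SYSDATE FROM customers WHERE ROWNUM <= 10"
    for construct, suggestion in find_dialect_issues(query):
        print(f"{construct}: consider {suggestion}")
```

Regexes will miss SQL built dynamically or hidden in stored procedures, so treat this as a way to size the work, not to sign it off.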

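You’ll need to audit your SQL for dialect differences, rewrite stored procedures, and confirm that every data type maps cleanly.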

In addition, testing can’t be an afterthought here. Unit tests, integration tests, and performance checks should be part of the process from the start. It’s how you validate that everything works as expected, not just at a systems level, but in terms of real-world app behavior.

And don’t wait until go-live to involve the application team. The earlier they’re engaged, the fewer surprises you’ll hit downstream. Small changes in sorting, pagination, and default values can ripple into major UX bugs if you’re not looking for them.
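Sorting is a good example of how those surprises happen. PostgreSQL treats NULL as larger than any non-null value, so an ascending ORDER BY puts NULLs last, while SQL Server and MySQL put them first. The Python sketch below simulates both behaviors on made-up rows to show the same LIMIT-style page coming back different. In PostgreSQL itself, writing NULLS FIRST or NULLS LAST explicitly in the ORDER BY removes the guesswork.

```python
from typing import List, Optional, Tuple

Row = Tuple[int, Optional[str]]  # (id, last_name); None stands in for SQL NULL


def order_rows(rows: List[Row], nulls_first: bool) -> List[Row]:
    """Sort ascending by last_name with NULLs first or last, then by id as a tiebreaker."""
    def key(row: Row):
        is_null = row[1] is None
        # False sorts before True, so flip the flag depending on where NULLs belong.
        null_rank = (not is_null) if nulls_first else is_null
        return (null_rank, row[1] or "", row[0])
    return sorted(rows, key=key)


def page(rows: List[Row], limit: int, offset: int = 0) -> List[Row]:
    """Equivalent of LIMIT/OFFSET applied to an already ordered result."""
    return rows[offset:offset + limit]


if __name__ == "__main__":
    rows = [(1, "Garcia"), (2, None), (3, "Adams"), (4, None), (5, "Lee")]
    print("PostgreSQL default:", page(order_rows(rows, nulls_first=False), limit=2))
    print("SQL Server default:", page(order_rows(rows, nulls_first=True), limit=2))
```

The id tiebreaker matters too: without a unique column in the ORDER BY, neither database promises a stable order between pages.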

Tools that help (and what to watch for)

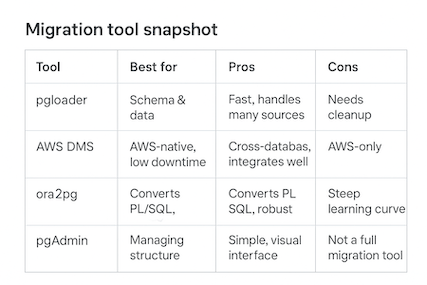

No tool replaces a smart migration strategy. But the right ones can make the work easier:

- pgloader: Automates schema and data migration. Handles many popular source systems.

  - Pros: Fast, scriptable, and handles a lot of heavy lifting.

  - Cons: Won’t catch edge cases. You’ll still need cleanup.


- AWS Database Migration Service (DMS): Best for teams already in AWS looking for minimal downtime.

  - Pros: Works across heterogeneous systems, well-integrated with AWS tooling.

  - Cons: Tied to AWS. Less ideal if you’re running PostgreSQL elsewhere.

- ora2pg: A favorite for Oracle-to-PostgreSQL migrations.

  - Pros: Great for converting PL/SQL and handling complex schema work.

  - Cons: Has a learning curve and requires tuning.

- pgAdmin: Not a migration tool per se, but helpful for managing imports, exports, and structure.

Each of these plays a role, but as much as you may want them to be, none of them is magic. The tool doesn’t make the migration; the team behind it does.

Don’t stop at cutover

Your database is “live.” Great!

But you’re not done.

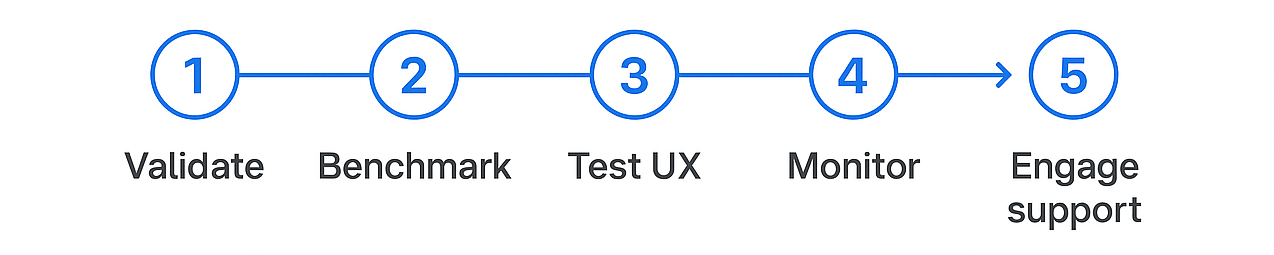

This is where reality sets in. A migration isn’t successful just because the lights came on. You need to prove it through testing, validation, monitoring, and ongoing support.

First up: trust, but verify. Just because the data is there doesn’t mean it’s right. Spot-check your tables. Confirm relationships. Make sure no rows got lost, no encodings broke, and nothing weird slipped through the cracks.
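If you want to automate part of that spot-check, one approach is to compare per-table row counts plus an order-independent checksum of each table’s rows. The sketch below assumes you’ve already fetched rows from both sides (the driver or export step is left out), normalizes each value to text, and hashes the rows, so lost rows and broken encodings both show up as mismatches. The text normalization is the assumption to tune: equal values must render identically from both drivers, and on large tables you’d chunk by primary key rather than hash everything in memory.

```python
import hashlib
from typing import Dict, Iterable, List, Sequence, Tuple

NULL_MARKER = "\\N"       # how NULL is rendered, borrowed from COPY's text format
FIELD_SEPARATOR = "\x1f"  # ASCII unit separator, unlikely to appear in data


def row_digest(row: Sequence[object]) -> int:
    """Hash one row after normalizing each value to text."""
    text = FIELD_SEPARATOR.join(NULL_MARKER if v is None else str(v) for v in row)
    return int.from_bytes(hashlib.sha256(text.encode("utf-8")).digest(), "big")


def table_fingerprint(rows: Iterable[Sequence[object]]) -> Tuple[int, int]:
    """Return (row_count, checksum). Summing digests mod 2**256 ignores row
    order but still counts duplicate rows."""
    count, total = 0, 0
    for row in rows:
        count += 1
        total = (total + row_digest(row)) % (1 << 256)
    return count, total


def compare_tables(source: Dict[str, List[Sequence[object]]],
                   target: Dict[str, List[Sequence[object]]]) -> Dict[str, str]:
    """Describe every table whose row count or contents differ between the two sides."""
    problems = {}
    for table, source_rows in source.items():
        if table not in target:
            problems[table] = "missing in target"
            continue
        source_count, source_sum = table_fingerprint(source_rows)
        target_count, target_sum = table_fingerprint(target[table])
        if source_count != target_count:
            problems[table] = f"row count {source_count} != {target_count}"
        elif source_sum != target_sum:
            problems[table] = "same row count, different contents"
    return problems


if __name__ == "__main__":
    source = {"customers": [(1, "Zoë"), (2, None)], "orders": [(10, 1, "9.99")]}
    # Same rows in a different order, but one name lost its encoding on the way.
    target = {"customers": [(2, None), (1, "Zo?")], "orders": [(10, 1, "9.99")]}
    print(compare_tables(source, target))
```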

Next: benchmark your performance. Your new PostgreSQL setup should meet or exceed the expectations set by your old database. If it’s slower (or just different), you’ll want to know why. That means capturing baseline performance metrics on the old system and comparing them with the new one under the same workload.
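It helps to decide up front what counts as a regression. This sketch assumes you’ve collected query latencies in milliseconds for the same workload on both systems, computes p50 and p95 with Python’s statistics module, and flags any percentile that got worse by more than a tolerance. The 10% default and the sample numbers are arbitrary placeholders.

```python
import statistics
from typing import Dict, List


def percentiles(latencies_ms: List[float]) -> Dict[str, float]:
    """p50 and p95 of a latency sample (needs at least two measurements)."""
    cuts = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94]}


def regressions(baseline: List[float], candidate: List[float],
                tolerance: float = 0.10) -> Dict[str, str]:
    """Flag percentiles where the candidate is more than `tolerance` slower than baseline."""
    base, new = percentiles(baseline), percentiles(candidate)
    flagged = {}
    for name in base:
        if new[name] > base[name] * (1 + tolerance):
            flagged[name] = f"{base[name]:.1f} ms -> {new[name]:.1f} ms"
    return flagged


if __name__ == "__main__":
    # Placeholder samples; in practice, replay the same workload on both systems.
    baseline = [12.0, 14.5, 13.2, 15.8, 12.9, 40.1, 13.7, 14.2, 12.5, 16.0]
    candidate = [11.8, 13.9, 13.0, 15.1, 12.7, 95.4, 13.5, 14.0, 12.2, 15.5]
    print(percentiles(baseline), percentiles(candidate))
    print(regressions(baseline, candidate) or "no regressions above tolerance")
```

Looking at p95 alongside p50 is the point: a migration can leave typical queries unchanged while a few slow plans get much worse.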

Then: test every application touchpoint. The connection strings may be correct, but that doesn’t mean your app logic behaves exactly the same way. Did pagination change? Are time zones handled differently? Are default values applying the same way? Now’s the time to find out before users do.
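Time zones are a good place to start. A PostgreSQL timestamp (without time zone) stores wall-clock time only, while timestamptz stores an absolute instant and displays it in the session’s TimeZone setting. When a wall-clock value is cast to timestamptz, PostgreSQL interprets it in the session time zone, so two app servers with different settings can read the same stored value as two different moments. The Python sketch below mimics that with naive and aware datetime objects; the two fixed offsets are hypothetical session settings.

```python
from datetime import datetime, timedelta, timezone

# A naive value, like PostgreSQL "timestamp without time zone": wall-clock only.
stored_wall_clock = datetime(2024, 3, 1, 9, 0)

# Hypothetical session time zones for two application servers (fixed offsets for simplicity).
SESSION_A = timezone(timedelta(hours=-5))
SESSION_B = timezone(timedelta(hours=1))


def as_instant(naive: datetime, session_tz: timezone) -> datetime:
    """Interpret a wall-clock value in a session's zone, the way PostgreSQL
    does when a timestamp without time zone is cast to timestamptz."""
    return naive.replace(tzinfo=session_tz)


instant_a = as_instant(stored_wall_clock, SESSION_A)
instant_b = as_instant(stored_wall_clock, SESSION_B)

print(instant_a.astimezone(timezone.utc))  # 2024-03-01 14:00:00+00:00
print(instant_b.astimezone(timezone.utc))  # 2024-03-01 08:00:00+00:00
print(instant_a - instant_b)               # 6:00:00
```

A six-hour gap from one stored value is exactly the kind of bug users notice before monitoring does, so it’s worth checking which of the two types each converted column ended up as.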

And once the dust settles, set up continuous monitoring. Tools like Percona Monitoring and Management (PMM) can help you track query performance, resource usage, and system health over time. Make sure you have alerting in place. Don’t wait for users to report issues.

Finally: engage your community. Whether it’s PostgreSQL forums, contributors, or professional support, PostgreSQL has an incredibly active ecosystem. Lean on it. Ask questions. Share what you’re seeing.

A migration doesn’t end at cutover. It ends when your new PostgreSQL environment is stable, monitored, and trusted by your systems, users, and team.

Why Percona is a top choice for PostgreSQL migrations

Planning a migration or already knee-deep in one? You don’t have to figure it all out yourself. Percona has helped countless teams move to PostgreSQL with less downtime, fewer surprises, and the right tools for production from day one.


What you’re migrating to

Percona for PostgreSQL gives you more than just the database.

It’s a production-ready stack built for the real demands of enterprise environments. High availability, security, backup, and monitoring are already included; no guesswork, no third-party patchwork. And because it’s fully open source, you keep control over your deployment without the cost or lock-in of proprietary forks.

And you’re not doing it alone. Behind the software is a team of PostgreSQL experts who help assess your environment, design better architecture, and guide you through the entire migration, plus ongoing support when you’re in production.

Taking the self-managed route for your PostgreSQL migration? Read this first.

A PostgreSQL migration is absolutely worth it, but it’s not effortless. If you want expert help, we’re here for that. But if you’re planning to go the DIY route, we’ve got something for you, too.

PostgreSQL in the Enterprise: The Real Cost of Going DIY

It’s everything you need to know about DIY PostgreSQL so you can go in prepared, not guessing.

FAQs

What is the best way to migrate a PostgreSQL database?

The most common way to migrate a PostgreSQL database is to export data from the source database with pg_dump and import it into the target database with pg_restore. This method is reliable and gives you flexibility in how you handle schemas and data. Planning, testing, and validation are also crucial steps in the migration process to ensure data integrity and system compatibility.

Why migrate to PostgreSQL?

Migrating to PostgreSQL offers several advantages, including robust data integrity, strong compliance with SQL standards, and extensive support for advanced data types and functionalities. Additionally, PostgreSQL’s open source nature ensures cost-effectiveness and a vibrant community for support. Its performance, scalability, and reliability make it an attractive option for businesses looking to leverage powerful database solutions.

What steps should you take to migrate to PostgreSQL?

- Assessment and planning: Evaluate your current database setup and define your migration goals.

- Schema migration: Use tools like pg_dump to export your database schema and apply it to the PostgreSQL environment.

- Data migration: Migrate your data using pg_dump for export and pg_restore for import, or consider using more sophisticated tools for larger databases.

- Testing: Rigorously test the migrated database for integrity, performance, and compatibility with applications.

- Optimization: Tune the PostgreSQL database for optimal performance based on the specific use cases.

- Deployment: Plan a cut-over to the new system with minimal downtime. Implement replication or other strategies if zero downtime is required.

- Maintenance and support: Ensure ongoing optimization, monitoring, and support post-migration.